Love them or hate them, research metrics are a key element of the scientific ecosystem. In this Advanced Science News special series, we will be taking a look at a range of different metrics, the ways in which they are calculated, their strengths, and their weaknesses.

The first cab off the rank is the original, and arguably still the most widely respected, metric: the Journal Impact Factor (JIF). In 1975 the Institute for Scientific Information (ISI) published the ‘Journal Citation Reports’. This report was based on over 4.2 million references published in around two and a half thousand journals.

The report was designed with two audiences in mind: librarians and researchers. ISI's founder, Eugene Garfield, argued that the measure could help librarians decide which scholarly journals were worth subscribing to, reasoning that “The more frequently a journal’s articles are cited, the more the world’s scientific community implies that it finds the journal to be a carrier of useful information”.

Garfield also argued that his journal metrics could be useful in helping researchers determine which journals would be most appropriate for publishing their work.

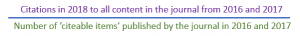

Today, the JIF is calculated by Clarivate Analytics using the Web of Science database. While there are numerous related metrics, this article focuses on the two-year journal impact factor. It is calculated with a deceptively simple formula; for 2018 it reads:

$$
\mathrm{JIF}_{2018} = \frac{\text{citations in 2018 to items published in 2016 and 2017}}{\text{number of citable items published in 2016 and 2017}}
$$

The numerator in this equation is relatively straightforward: it is the number of citations, from sources indexed in the Web of Science ‘Core Collection’, that anything the journal published in 2016 and 2017 received in 2018.

The denominator is the number of ‘Citable Items’, which is not the same as the number of manuscripts a journal publishes. Broadly speaking, a citable item is research or review content. Non-citable items, by comparison, are editorial or news content.

However, the line between these two categories can sometimes blur, and because a smaller denominator yields a larger ratio, this blurring can have a significant impact on the JIF. For example, between 2002 and 2003 the JIF of the journal Current Biology rose sharply, in part due to a change in how the denominator was calculated.
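The formula can be sketched in a few lines of Python to make the numerator/denominator asymmetry concrete. This is a minimal illustration under stated assumptions: the `Item` record, its fields, and `two_year_jif` are hypothetical names, not part of any Clarivate or Web of Science API, and the sketch ignores which citing sources are indexed in the Core Collection. Citations to every item in the window count toward the numerator, but only citable items count toward the denominator, so reclassifying content changes the result.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Item:
    """One piece of content a journal published (hypothetical record layout)."""
    year: int                   # publication year
    citable: bool               # True for research/review, False for editorial/news
    citations_in: Dict[int, int]  # citations received, keyed by citing year


def two_year_jif(items, jcr_year):
    """Two-year impact factor for `jcr_year`.

    Numerator: citations received in `jcr_year` by ALL items published in the
    two preceding years. Denominator: only the CITABLE items from those years.
    """
    window = {jcr_year - 1, jcr_year - 2}
    in_window = [it for it in items if it.year in window]
    citations = sum(it.citations_in.get(jcr_year, 0) for it in in_window)
    citable = sum(1 for it in in_window if it.citable)
    if citable == 0:
        raise ValueError("no citable items in the two-year window")
    return citations / citable


# Hypothetical journal, not real data.
journal = [
    Item(2016, True, {2018: 12}),
    Item(2017, True, {2018: 3}),
    Item(2017, False, {2018: 3}),  # editorial: its citations still count above the line
    Item(2015, True, {2018: 40}),  # outside the 2016-2017 window, ignored
]
print(two_year_jif(journal, 2018))  # 18 citations / 2 citable items = 9.0

# Reclassify everything as citable: same numerator, bigger denominator.
reclassified = [Item(it.year, True, it.citations_in) for it in journal]
print(two_year_jif(reclassified, 2018))  # 18 / 3 = 6.0
```

Moving the single editorial between categories shifts the toy JIF from 9.0 to 6.0 without any change in how often the journal is cited, which is the kind of effect the Current Biology case illustrates.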

It is generally accepted that this equation can, at the very least, give librarians a rough guide when choosing which journals to subscribe to. There is, however, one important caveat even within this limited use: the JIF should never be used to compare journals from different disciplines. Numerous studies have shown that different subject areas have very different citation profiles over time.

Because the JIF counts citations only within a short window, these differences in citation profiles have a large effect on it. For example, in 2015 the mean age of the literature cited in clinical medicine in Web of Science was 9.77 years; in mathematics it was 16.65 years. Since the JIF only takes into account citations in the two years after publication, clinical medicine journals are likely to have higher JIFs than their mathematical counterparts.
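A rough way to see why the window matters is a deliberately crude toy model. It assumes, purely for illustration, that the ages of cited papers follow an exponential distribution whose mean equals the field's mean citation age; real citation-age distributions typically rise before they decay, so the numbers are indicative only. The helper name `share_within_window` is hypothetical.

```python
import math


def share_within_window(mean_age, window_years=2.0):
    """Fraction of a field's citations going to literature at most
    `window_years` old, ASSUMING citation ages are exponentially
    distributed with the given mean (a toy simplification)."""
    return 1.0 - math.exp(-window_years / mean_age)


# 2015 mean ages of cited literature quoted in the text (Web of Science).
for field, mean_age in [("clinical medicine", 9.77), ("mathematics", 16.65)]:
    print(f"{field}: {share_within_window(mean_age):.1%} of citations in window")
```

Under this assumption roughly 18.5% of clinical medicine citations fall inside a two-year window, against about 11.3% for mathematics, so a mathematics journal of comparable standing would start with a lower JIF simply because of how its field cites.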

The utility of JIFs outside the library is more contested. Some scientific funding agencies are known to pay attention to the impact factors of the journals in which researchers publish. Inherent in this interest is the assumption that the JIF of the journal in which a manuscript is published reflects the influence of the work or of its author. While a range of factors make this assumption problematic, one key characteristic of the JIF renders such use completely invalid.

The editorial team at Nature analyzed how much individual papers contributed to the journal’s 2004 impact factor and found that 89% of it was generated by just 25% of the content. While the JIF for that year was 32.2, the vast majority of papers from the two-year JIF window received fewer than 20 citations. The skew was driven by papers such as the one reporting the mouse genome, which received 522 citations in that year alone. This phenomenon has been shown to be reproducible across a range of journals. Given these facts, it cannot be argued that an article has any greater impact or influence on its field simply because it is published in a journal with a high JIF.
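The effect of this skew on an average such as the JIF can be shown with a small calculation. The citation counts below are entirely hypothetical (they are not Nature’s data), and the JIF is treated loosely as a mean of citations per paper; `top_share` is an illustrative helper name. The point is that a handful of highly cited papers pull the mean far above what a typical paper receives.

```python
import statistics


def top_share(citations, fraction=0.25):
    """Share of all citations earned by the most-cited `fraction` of papers."""
    ranked = sorted(citations, reverse=True)
    k = max(1, round(len(ranked) * fraction))
    return sum(ranked[:k]) / sum(ranked)


# HYPOTHETICAL citation counts for 20 papers in one two-year window.
citations = [300, 120, 80, 45, 25, 15, 12, 10, 9, 8,
             7, 6, 5, 4, 3, 3, 2, 1, 1, 0]

mean = statistics.mean(citations)      # what a JIF-style average reports
median = statistics.median(citations)  # what a typical paper receives
below = sum(c < mean for c in citations)

print(f"mean={mean:.1f}, median={median}, top 25% share={top_share(citations):.0%}")
print(f"{below} of {len(citations)} papers are cited less than the mean")
```

In this made-up set the mean is 32.8 while the median is 7.5, the top quarter of papers earns about 87% of all citations, and 16 of the 20 papers fall below the average, so the average says little about any individual paper.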

When used correctly, the JIF can certainly be a useful tool for assessing the influence of a particular journal within a given field. However, because the JIF is poorly suited to assessing the impact of individual articles or scientists, a range of other metrics have been devised to support fairer comparisons. Over the coming weeks we will be exploring some of these metrics and assessing their advantages and disadvantages.

The next two articles in the “Research Metrics” series are out now, on altmetrics and the h-index!