The world has witnessed major technological advances in the last decade. Wi-Fi and smartphones are now ubiquitous, and being “connected” implies that we are plugged into our devices 24/7 rather than engaging in real-life, human-to-human interactions.

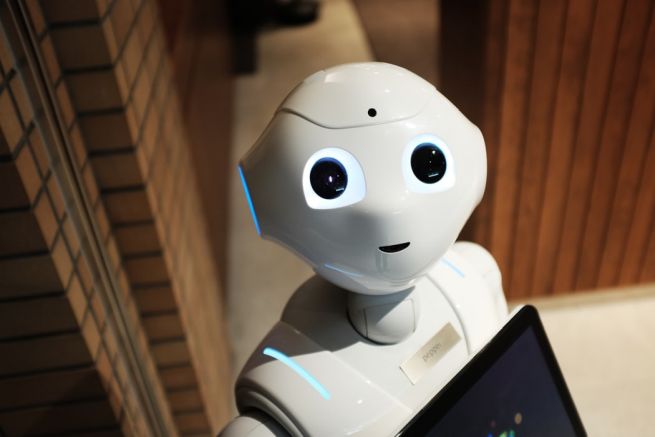

The capabilities of artificial intelligence (AI) have also grown rapidly, and researchers continue to bring futuristic concepts that once only existed in our imaginations or sci-fi movies to reality. For example, AI now has the ability to diagnose disease and even predict the likelihood of death by scanning electrocardiogram results.

Although the concept of AI was first proposed in the 1950s, it has only relatively recently entered the public eye, especially with the launch of Google’s DeepMind and OpenAI, two leading AI research companies established in 2010 and 2015, respectively.

An early example of game-changing AI was IBM’s Deep Blue, a chess-playing computer, which made headlines in 1997 when it beat the reigning world champion, Garry Kasparov, in a gripping six-game match.

Since then, DeepMind’s AlphaZero gained mainstream attention in 2017 as a single learning algorithm that taught itself Go, chess, and shogi and went on to defeat the strongest existing program in each game, marking another major milestone in AI development.

This impressive example of “narrow AI,” which is AI designed to accomplish a particular task, used a deep neural network that trains itself through reinforcement learning by playing against itself. This process allowed for a highly dynamic style of play, more akin to a human than a computer, which had not yet been realized by AI and thoroughly impressed the game masters who studied its matches.

OpenAI, co-founded by Elon Musk, focuses on developing safe artificial general intelligence (AGI), which refers to “highly autonomous systems that outperform humans at most economically valuable work.” In other words, AGI is a more advanced version of AI in which a system can adapt to any generalized task demanded of it.

OpenAI’s concept raises more than a few interesting questions, and its emphasis on “safe” AGI necessarily implies that the technology has the potential to be unsafe. If the universally agreed-upon purpose of intelligent systems is to advance scientific discovery and benefit humanity, what could possibly go wrong?

Mass unemployment as a side effect of implementing AI in the workplace is one obvious concern (although, conversely, AI could create new types of jobs, leaving the grunt work for the machines), but aside from possibly rendering humans useless, there are even bigger worries.

Thanks to the Terminator film series, my immediate thoughts about the future of AI are dystopian. I can’t help but think of machines designed for the sole purpose of eradicating mankind, and I’m sure I’m not the only one imagining a future scenario of Skynet-borne cyborg assassins.

Elon Musk is a major proponent of the AI-gone-rogue theory or, in the best-case scenario, the idea that humans will be “left behind” rather than eliminated. His proposed solution for potentially preventing the “existential threat of AI” is Neuralink.

Founded by Musk in 2016, the company focuses on developing technology that merges our brains with AI so that we can experience a kind of “symbiosis with artificial intelligence.” Musk stresses that the technology would be offered only as an option to individuals who want this experience; it would certainly not be forced upon those opposed to the idea.

Hypothetically, this feat would be accomplished by a quick and painless insertion of a chip into the brain, after which tasks could be carried out by the power of thought alone, possibly enabling abilities as far-fetched as telepathy among humans. A more tangible benefit of the chip would be to potentially treat neurological disorders like Parkinson’s disease or Alzheimer’s, but both achievements are likely far off given the novelty of the technology, which was first unveiled earlier this year.

Perhaps a more probable risk of AI is that it falls into the wrong (human) hands, so to speak. For example, malicious use of AI could play out in the form of terrorists deploying autonomous weapons or hacking AI-controlled systems that run a society’s critical infrastructure, such as energy and transportation, causing chaos and destruction.

Online tracking and the use of personal data by companies to target our exposure to certain information and advertisements via algorithms is already a very real privacy issue. In the wrong hands, AI could also make short work of damaging an individual’s reputation by uncovering private information and spreading it online to the masses.

The introduction of bias into an AI system is another interesting risk that has been encountered recently. For example, Google’s BERT, a general-purpose language model that learns how humans put words together and is used to aid search engines, is trained on huge amounts of text that inevitably contain embedded biases. This means that BERT may associate certain words with one gender over another, or attach a negative connotation to a particular name no matter the context.

Undoubtedly, this is only a fraction of the foreseeable risks of AI, but of course this does not mean we should not pursue the technology. There are numerous possibilities for its future—good and bad.

But if super-intelligent machines gain a mind of their own and happen to decide we’re dispensable, well, it’s on us.

To read more of the most significant science stories of the last decade, check out our Science of the 2010s series.