Science has an image fraud problem. A 2018 analysis of biomedical literature estimated that up to 35,000 papers may need retracting due to improper image duplication. Now, AI technology exists that generates fake images that are next to impossible to detect by eye alone.

In an opinion piece published in the journal Patterns, computer scientist Ronshang Yu from Xiamen University in China demonstrates just how easy it is to generate what are known as “deepfakes”.

Training the AI

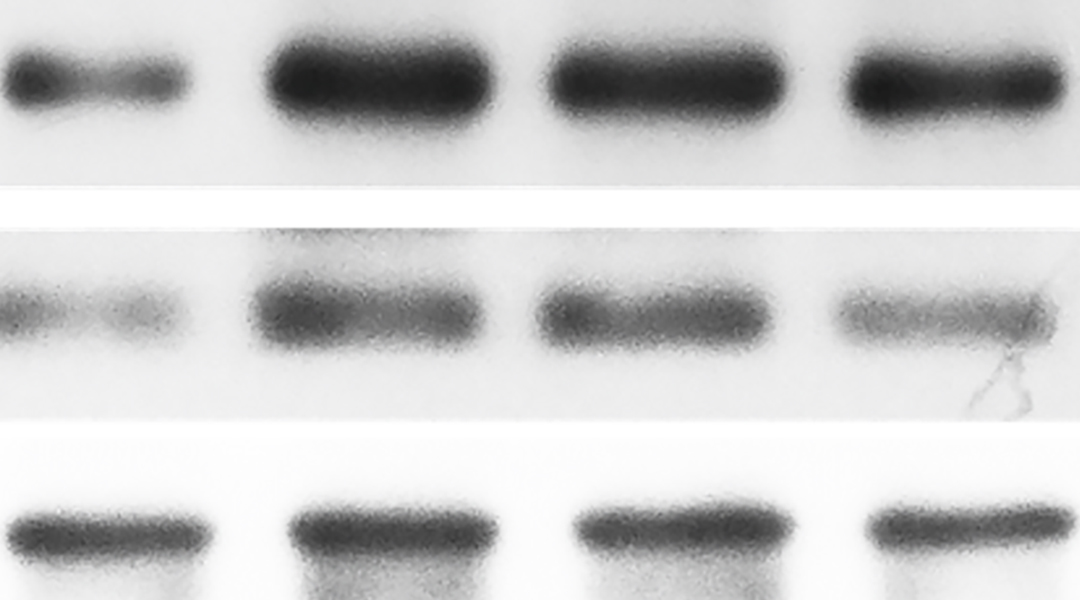

Yu and his collaborators created deepfakes of common pictures in biomedical literature: images of Western blots or cancer cells. They used an AI technology known as a generative adversarial network (GAN), which consists of two algorithms. The first generates the fake images based on an input database of images while the second attempts to discriminate between the input images and the fakes, thereby training the first algorithm.

But these fakes are not duplicates or splices of the existing images. The first algorithm captures the statistical properties of the database images, everything from color to texture, and uses these to generate unique images. Once the network is trained, its fakes can be next to impossible for humans to detect (for example, see the fake faces at this-person-does-not-exist.com).
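To make the two-algorithm idea concrete, here is a deliberately tiny sketch of the adversarial training loop. It is not the image model used in the opinion piece: the "images" are single numbers, the generator has one learnable parameter, and every name and setting (`train_toy_gan`, `real_mean`, the learning rate and so on) is a hypothetical choice made for illustration.

```python
import math
import random


def sigmoid(t):
    """Logistic function, written to avoid overflow for large |t|."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(real_mean=4.0, real_std=0.5, steps=2000, batch=32, lr=0.05, seed=0):
    """Toy 1-D GAN (illustrative assumption, not the method from the paper).

    Generator:      G(z) = z + b, with z drawn from a standard normal.
    Discriminator:  D(x) = sigmoid(w * x + c), its estimate that x is real.
    Returns the learned generator offset b.
    """
    rng = random.Random(seed)
    b = 0.0          # generator parameter
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator step: gradient ascent on log D(real) + log(1 - D(fake)).
        grad_w = grad_c = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            grad_w += (1.0 - d) * x
            grad_c += 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            grad_w -= d * x
            grad_c -= d
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Generator step: gradient ascent on log D(fake), so the generator
        # shifts its samples toward whatever the discriminator calls real.
        grad_b = sum((1.0 - sigmoid(w * x + c)) * w for x in fake)
        b += lr * grad_b / batch
    return b


learned_offset = train_toy_gan()
print("generator offset after training:", learned_offset)
```

Because this discriminator is linear, the generator can only learn where the real data sit, not their full shape. Image GANs replace both parts with deep neural networks, but the alternating "discriminate, then generate" loop is the same idea.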

GANs are freely available online and, according to Yu, neither difficult nor costly to run. The deepfakes in the opinion piece were created by a bachelor’s student in his lab using an ordinary computer and reference images scraped from the web. For Yu, the goal was to warn the biomedical community, with whom he collaborates on other imaging research.

Are we too late?

Elisabeth Bik, a microbiome and science integrity consultant and author of the 2018 analysis, believes deepfakes are already present in the literature. “I would argue this has been ongoing since probably 2017, 2018,” she said. “I’ve seen papers that look like they have completely fake Western blots.”

Her expertise in scientific image fraud comes from manually identifying duplicated images, or cropped images manipulated in a way that misrepresents the data. However, Bik says wholly generated images are on another level. “That’s even worse than fabricating something out of parts you already had. At least at some point, some experiments happened.” When it comes to deepfakes, no experiment need ever be run. “This is all artificially generated.”

Image fraud detection arms race

Fortunately, detectable differences between deepfakes and real images do exist; they just can’t be seen by eye. One example Yu describes is converting images to the frequency domain. Just as sound can be broken down into high and low frequencies, so can images.

“If an image has more details, more edges, then it has more high frequency components,” explained Yu. “If the image is more blurry or it doesn’t have many details, it has more low frequency components.” Training AI to spot the differences in the frequency domain between fakes and real images could be one way to foil would-be fraudsters.

Both Yu and Bik believe that it will be impossible to catch all the fraud. As detection improves, so too will the next generation of fakes. “I’m not sure if we can ever make fraud like this go away by trying to catch it,” said Bik. “You can raise the bar in detection, but then they’ll raise the bar in generation.”

Bik does believe that journals should still try to make it harder to fake data, for example by requiring uncropped images along with the smaller versions used in figures, asking research institutions to provide some evidence that the work happened there, or watching for batch submissions with similar titles from the same email address.

“I [think] we should, as editors or publishers, no longer rely on [the assumption that] whatever is sent to us is real,” said Bik. “You have to really think about the possibility that 10% or 20% of what you’ll get is actually completely fake.”

Reference: Wang et al., Deepfakes: A new threat to image fabrication in scientific publications?, Patterns (2022). DOI: 10.1016/j.patter.2022.100509