Deep learning is a fascinating subfield of machine learning that creates artificially intelligent systems inspired by the structure and function of the brain. The basis of these models is bio-inspired artificial neural networks, which mimic the neural connectivity of animal brains to carry out cognitive functions such as problem solving.

One of the fields in which neuromorphic computing has produced its most impressive results is visual image analysis. Just as our brains learn to recognize objects in order to make predictions and act upon them, an artificial intelligence system must be shown millions of pictures before it can generalize well enough to make educated guesses about images it has never seen before.

Professor Cheol Seong Hwang from the Department of Material Science and Engineering at Seoul National University and his research team have developed a method to accelerate image recognition by combining the inherent efficiency of resistive random access memory (ReRAM) with that of cross-bar array structures, two of the most commonly used hardware technologies.

Many of us have performed a reverse image search, using a given image to find related information and browse similar results. To teach computers to carry out such tasks by recognizing objects, scientists developed convolutional neural networks (named after the mathematical operation “convolution”), which have revolutionized this process by applying learned filters that require little preprocessing compared with hand-engineered algorithms.
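To make the idea of a convolutional filter more concrete, here is a minimal pure-Python sketch of the sliding-window operation a convolutional layer performs. The function name `conv2d`, the tiny image, and the hand-written edge-detecting kernel are all illustrative assumptions; in a real network the kernel weights are learned from data rather than written by hand, and libraries add padding, strides, and many channels.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Like most deep-learning libraries, this computes cross-correlation:
    the kernel is not flipped before sliding.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical grayscale "image": dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]

# Hand-written vertical-edge kernel; a CNN would learn weights like these.
edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

print(conv2d(image, edge_kernel))  # → [[0, 27, 27], [0, 27, 27]]
```

The large values mark where the kernel straddles the dark-to-bright boundary, which is the sense in which a filter "extracts a feature" (here, a vertical edge) from an image.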

“In machine learning, image recognition is the first task, which is usually accomplished by [creating a] neural network,” says Hwang. “A convolutional neural network is one of the most effective networks [for this], which uses the extracted features from images … to identify what it is. [They are] usually constructed by a computer program in conventional high-performance servers or laptops, which takes a huge amount of computing power.”

As Hwang mentioned, one of the greatest challenges in this field is the huge amount of data that must be shuttled back and forth between the system’s memory and its processors, which costs a great deal of time and energy. To optimize this process, the design of the computer’s hardware is critical for maximizing memory capacity and computational efficiency, and improving that design is exactly what Hwang and his team set out to do.

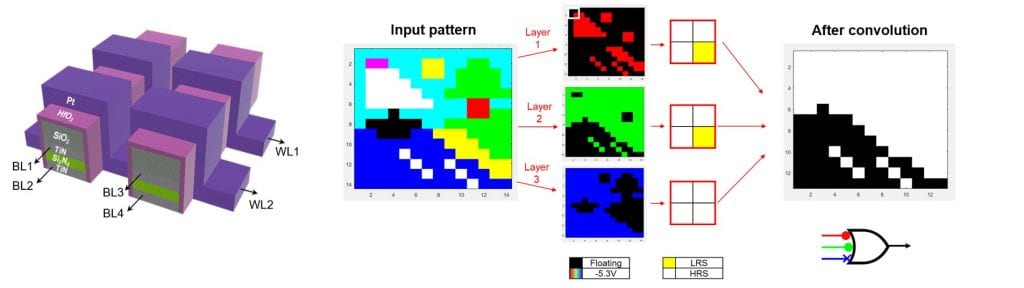

Cross-bar array structures consist of multiple crossing metal lines with memory cells at the line intersections, while ReRAM cells are memory cells that store data as different resistance states. The energy required for data access and retention is very small compared with that of conventional memory, making ReRAM a promising candidate for future memory technologies. Meanwhile, stacking memory in the vertical direction is already popular in the storage memory industry, since it achieves much higher data density in a confined area.
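Why pair resistance-based memory with a cross-bar layout? In the standard idealized model used across the field (not a description of this team's specific circuit), each cell's conductance acts as a stored weight. Applying voltages to the rows makes each cell pass a current given by Ohm's law, and Kirchhoff's current law sums those currents along each column, so one read performs a whole vector-matrix multiplication where the data is stored. The sketch below assumes ideal wires and devices (no line resistance, leakage, or device variation); the function name `crossbar_currents` and all values are hypothetical.

```python
def crossbar_currents(voltages, conductances):
    """Ideal cross-bar read: column current I_j = sum_i V_i * G_ij.

    voltages: input voltage applied to each row.
    conductances: conductances[i][j] is the cell between row i and column j.
    Returns the current collected at the bottom of each column.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need exactly one input voltage per row")
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law gives each cell's current; Kirchhoff's law sums them per column.
            currents[j] += voltages[i] * conductances[i][j]
    return currents


# Hypothetical 3x2 array: row voltages in volts, conductances in microsiemens,
# so the resulting column currents are in microamperes.
voltages = [0.5, 0.25, 0.0]
conductances_uS = [
    [10, 20],
    [40, 0],
    [30, 30],
]

print(crossbar_currents(voltages, conductances_uS))  # → [15.0, 10.0]
```

In a digital processor, each of those multiply-and-add steps would require fetching a weight from memory; in the physical array they all happen at once inside the memory, which is the data-movement saving described above.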

“Constituting a cross-bar array using ReRAM is a well-known strategy in the community,” says Hwang. “However, making a cross-bar array with stacked ReRAM [creating 3D configurations] is a relatively new idea.”

ReRAM-based cross-bar arrays have previously been reported to suffer from a number of practical problems, the most critical of which is circuit instability. This study addresses many of these limitations by using a new ReRAM material.

The team combined elements of the two systems to create a two-layer stacked crossbar array composed of Pt/HfO2-x/TiN ReRAM cells. According to Hwang, these new structures have the potential to lead the future of neuromorphic computing.

“Pt/HfO2-x/TiN can mitigate many problems of the ReRAM-based cross-bar arrays, [such as] unwanted leakage current. It has an even simpler structure compared with the previous contenders, making them more competitive in production,” says Hwang.

To speed up the network’s image recognition process, the team also implemented a kernel, a type of filter that extracts specific features from a given image and is used extensively in convolutional neural networks. Fabricating the intended structure took several years, but through hard work and their combined experience and knowledge, Hwang and his co-workers developed a feasible method for implementing a convolutional neural network as a circuit in neuromorphic hardware.
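One way to picture how a kernel can run on a cross-bar array is to flatten the kernel's weights into a column of conductances and feed each flattened image patch in as row voltages, so that the column current equals one output value of the convolution. Because a physical conductance cannot be negative, the sketch below stores each signed weight as the difference of two columns, a common differential-pair scheme. This is an assumed, idealized mapping for illustration; the article does not say how the team encoded their kernels, and the names `kernel_to_differential_columns` and `crossbar_conv2d` are hypothetical.

```python
def kernel_to_differential_columns(kernel):
    """Split a signed kernel into two non-negative conductance columns.

    A weight w is represented as g_plus - g_minus (assumed differential pair),
    since real cells can only have non-negative conductance.
    """
    flat = [w for row in kernel for w in row]
    g_plus = [max(w, 0) for w in flat]
    g_minus = [max(-w, 0) for w in flat]
    return g_plus, g_minus


def crossbar_conv2d(image, kernel):
    """Ideal convolution (no padding, stride 1) computed as column currents."""
    kh, kw = len(kernel), len(kernel[0])
    g_plus, g_minus = kernel_to_differential_columns(kernel)
    feature_map = []
    for r in range(len(image) - kh + 1):
        row = []
        for c in range(len(image[0]) - kw + 1):
            # Flatten the current image patch into the row input voltages.
            v = [image[r + i][c + j] for i in range(kh) for j in range(kw)]
            # Each column current is a dot product; the difference restores the sign.
            i_plus = sum(vi * g for vi, g in zip(v, g_plus))
            i_minus = sum(vi * g for vi, g in zip(v, g_minus))
            row.append(i_plus - i_minus)
        feature_map.append(row)
    return feature_map


# Same hypothetical image and vertical-edge kernel as a plain convolution would use.
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]
edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

print(crossbar_conv2d(image, edge_kernel))  # → [[0, 27, 27], [0, 27, 27]]
```

The result matches an ordinary software convolution; the difference is that, on hardware, each dot product would be a single analog read of the array rather than a loop of separate multiplications.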

In terms of future directions: “We want to extend this research into a fully integrated system with [a larger cross-bar array] (at least 1kb) and multiple blocks and control circuits,” adds Hwang. “Perhaps the next step is to combine [our] circuit (as a synaptic block) with field programmable gate array chip (as a neuron circuit), but eventually we may want to integrate all of the circuit components in a form of application-specific integrated circuit.”