Fast, precise, capable of carrying out the same task millions of times with minimal error: this is a robot. Thanks to skills that go beyond the ordinary abilities of human beings, robots have become essential tools in our lives. But despite their “superpowers”, they reveal their greatest limitation when the unexpected comes into play: outside familiar, controlled environments, robots struggle to adapt to their surroundings. Why does this happen?

Although robots can emulate human or animal activities, they lack what is called “embodied intelligence”. Our bodies give us the ability to adapt to, and actively interact with, the environment around us. For example, the structure of our hand and the strength of its muscles allow us to grab, lift, and hold different objects. Moreover, the receptors in our skin allow us to recognize a variety of materials and to distinguish a heavy, cold piece of iron from a light, soft feather. These abilities, which come so naturally to living organisms, are not easy to replicate in artificial machines.

Soft robotics, a specialized branch of robotics, partly addresses these limitations by fitting robots with compliant bodies, i.e., structures that are adaptable and flexible. Unlike their rigid counterparts, soft robots are made of flexible materials that allow them to adjust to their surroundings and interact with objects by deforming, bending, and twisting. However, although these properties already represent a step forward for the field, soft robots still cannot emulate an important capability: sensing.

Sensations like touch, pain, and temperature, as well as spatial awareness, are all handled by the somatosensory system and interpreted by the brain in the somatosensory cortex, a region of the cerebral cortex. Scientists strive to reproduce this ability and to create artificial systems that integrate perception and motility. This might sound very sci-fi, but a group of researchers based in Singapore has done just that.

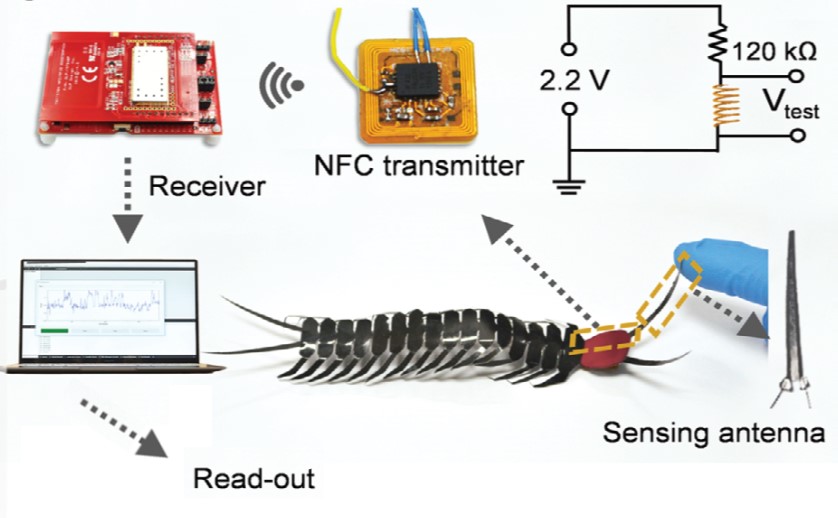

How is this possible, you might ask? This robot, created by Professor Ghim Wei Ho and her collaborators, can detect external stimuli such as light as a “somatic sensation” (a bodily sensation) and convert them into movement. Much as we pull our hand back from intense heat, the robot responds to changes in light or temperature by moving.

“The thin film robots in moving state can simultaneously sense strain and temperature, providing complex perceptions of their body status, as well as the surrounding environments,” said Ho. “Essentially, it’s an embodied intelligence in a single thin film material — one that can sense, process and respond to its environment without tethering any sensing and processing electronic components.”

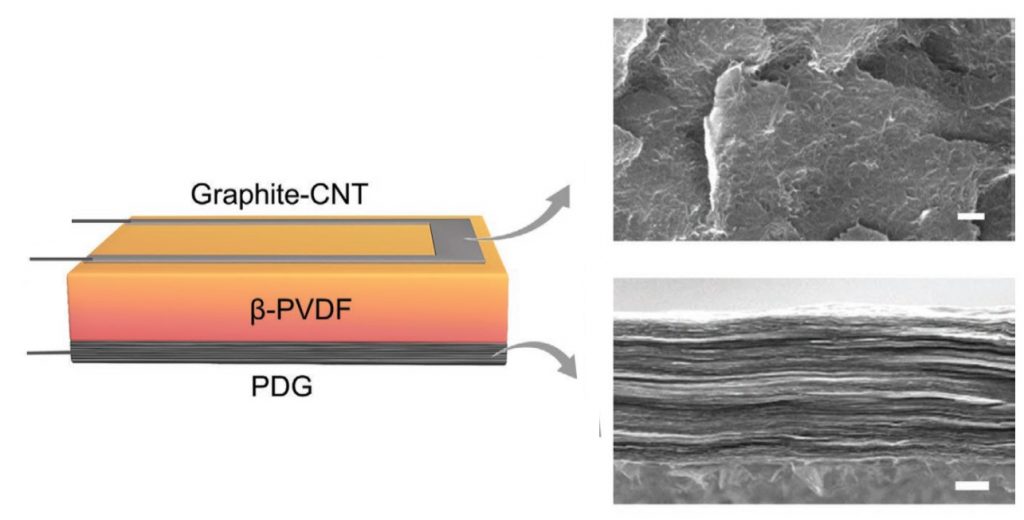

The robot’s “somatic system” was made from a thin film that combines three materials: a photothermal layer of polydopamine reduced graphene oxide (PDG), a ferroelectric layer of poly(vinylidene difluoride) (PVDF), and a conductive graphite–carbon nanotube composite (graphite–CNT).

Within the device, the PDG photothermal layer and the PVDF ferroelectric layer work together, forming what the team calls a bimorph actuator (a structure of two bonded layers). The photothermal layer converts light into heat. This heat is detected by the PVDF layer, changing its internal potential, and causes the entire film to bend.

Thanks to the versatility of 3D printing and kirigami (the Japanese technique of cutting and folding paper), the robots are easily customizable, and the researchers have been able to overcome one of the major challenges in the field of soft actuators: the fabrication of centimeter-sized robots.

“We are now still working to solve the problem of closed-loop feedback control between the sensors and actuators, looking forward to developing small-sized robots capable of making their own decisions,” the authors explained. “We hope to achieve soft robots that can finally work autonomously, respond and adapt to changing environments like living organisms.”

Ho believes that this research will have a direct impact, with tangible outcomes, in numerous fields and applications. “This research pushes forward the possibility of machines adept at handling unpredictable situations with safe physical interactions and unprecedented functions,” she said.

Reference: Xiao-Qiao Wang et al., Somatosensory, Light‐Driven, Thin‐Film Robots Capable of Integrated Perception and Motility, Advanced Materials (2020). https://doi.org/10.1002/adma.202000351

This article also appears in “Celebrating Excellence in Advanced Materials: Women in Materials Science”.